B and B week 6/15 - 6/22

One of the main improvements to my platform creation algorithm came from realizing that the platforms were all being generated the same distance away from each other. This was enough to show that the algorithm reads the melody, but it didn't capture the rhythm. I had to figure out how to place each platform at a position that corresponds to its time in the song. The problem was that the platforms are all generated at once, at the start.

The solution came from my PreprocessAudio() function, which simulates time moving through the song by looking at the number of samples in the song and loading the data one chunk at a time. Each chunk covers the same amount of song time, so I used this fact to increment a position float by a small, fixed amount every simulated frame. This lets the platforms be placed in time with the music, as intended.
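As a rough illustration of the idea, here is a minimal sketch in plain C# with no Unity types. It is not my actual PreprocessAudio() code: the names `PlatformTiming`, `PositionsForPeaks`, `isPeakPerChunk`, and `unitsPerSecond` are hypothetical, and it assumes every chunk holds the same number of samples.

```csharp
using System;
using System.Collections.Generic;

public static class PlatformTiming
{
    // Returns one position per chunk that was flagged as a peak.
    // Every chunk covers the same amount of song time, so the position
    // advances by the same fixed step for each simulated frame.
    public static List<float> PositionsForPeaks(
        bool[] isPeakPerChunk, // hypothetical: one flag per analyzed chunk
        int sampleRate,        // samples per second of the audio
        int chunkSize,         // samples analyzed per simulated frame
        float unitsPerSecond)  // hypothetical: world units per second of song
    {
        float secondsPerChunk = (float)chunkSize / sampleRate;
        float stepPerChunk = secondsPerChunk * unitsPerSecond;

        var positions = new List<float>();
        float position = 0f;
        foreach (bool isPeak in isPeakPerChunk)
        {
            if (isPeak)
            {
                positions.Add(position);
            }
            position += stepPerChunk; // advance once per simulated frame
        }
        return positions;
    }
}
```

Because the step is derived from the chunk length, two peaks that are twice as far apart in the song end up twice as far apart in the level.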

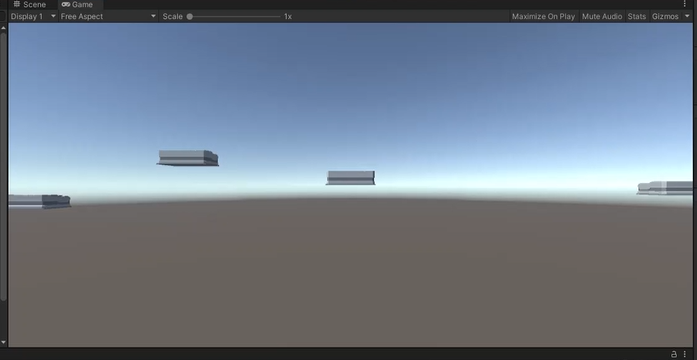

I now had platforms that were created at varying distances from each other, but it was hard to see how the song actually influenced them. To track this and judge how much progress I had made so far, I needed a way to follow the platforms along with the music. So, for demo purposes, I decided to create a moving camera that focuses on each platform at the moment in the song it is synced with. It works like this: in the update loop, a timer advances every frame. When the timer reaches one of the platforms' initialization times, the camera shifts to that platform's position.

void MoveCamera()

{

timer += Time.deltaTime;

if (peakTrackingIndex < spectralFluxSamples.Count)

{

if (timer > spectralFluxSamples[peakTrackingIndex].Time)

{

if (spectralFluxSamples[peakTrackingIndex].IsPeak)

{

if (cameraTransformsIndex < platformPlacer.CameraTransforms.Count)

{

_camera.transform.position = platformPlacer.CameraTransforms[cameraTransformsIndex];

cameraTransformsIndex++;

}

}

peakTrackingIndex++;

}

}

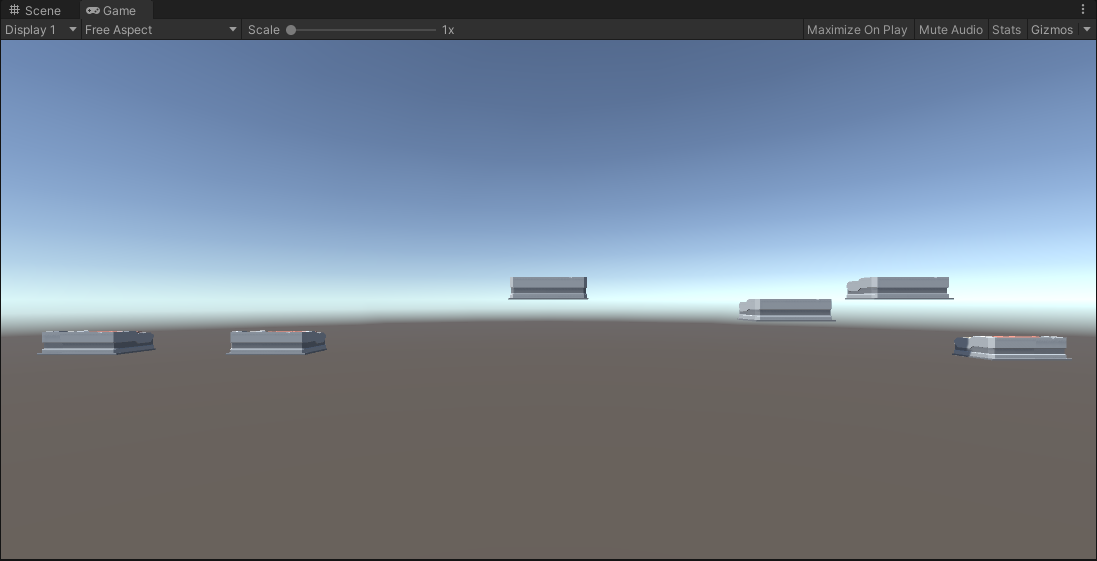

}Through this change I realized that the platforms were being created too often. I already had a way of influencing this however, and that was through the threshold multiplier value, which I adjusted until my platforms were starting to correlate to the song more accurately than before. Another large helpful contributing factor was changing how large of a range of frequencies I used to calculate the spectral flux. So instead of using every frequency to help calculate the spectral flux needed to find the beats, I clamped the range to a smaller range of frequencies I feel constitute the melody in order to get a more precise beat detection system. With all of these changes, as well as the new camera, this is how my current progress looks.
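To make the two adjustments concrete, here is a minimal sketch in plain C#, not my exact implementation. The names `BandLimitedFlux`, `Flux`, `Threshold`, `BinForFrequency`, `minBin`, `maxBin`, and `halfWindow` are hypothetical. It assumes the spectrum's bins evenly cover 0 Hz up to half the sample rate, and that the threshold is the average flux in a window around a sample, scaled by the multiplier.

```csharp
using System;

public static class BandLimitedFlux
{
    // Spectral flux restricted to bins [minBin, maxBin]: the sum of the
    // positive magnitude increases between two consecutive spectra.
    public static float Flux(float[] previous, float[] current, int minBin, int maxBin)
    {
        int lo = Math.Max(0, minBin);
        int hi = Math.Min(Math.Min(previous.Length, current.Length) - 1, maxBin);
        float sum = 0f;
        for (int i = lo; i <= hi; i++)
        {
            sum += Math.Max(0f, current[i] - previous[i]);
        }
        return sum;
    }

    // Threshold at index: the mean flux in a window around it, scaled by
    // the multiplier. A larger multiplier lets fewer samples through.
    public static float Threshold(float[] flux, int index, int halfWindow, float multiplier)
    {
        int start = Math.Max(0, index - halfWindow);
        int end = Math.Min(flux.Length - 1, index + halfWindow);
        float sum = 0f;
        for (int i = start; i <= end; i++)
        {
            sum += flux[i];
        }
        return sum / (end - start + 1) * multiplier;
    }

    // Maps a frequency to a bin index, assuming binCount bins evenly
    // cover 0 Hz up to sampleRate / 2.
    public static int BinForFrequency(float frequencyHz, int sampleRate, int binCount)
    {
        float hzPerBin = (sampleRate / 2f) / binCount;
        return (int)(frequencyHz / hzPerBin);
    }
}
```

A sample would count as a beat candidate only when its band-limited flux is above the threshold at that index, so raising the multiplier or narrowing the bin range both reduce how many platforms get created.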

When determining what to do next, I went back to my schedule. My original plan for this point was to “Start with using the beat to place platforms that sync with the rhythm”, since I didn’t believe I would be this far along, but I am surprisingly ahead of schedule. Because of this, I have shifted gears: my plan now is to start polishing how accurately the platforms follow the rhythm of the music. Sparser songs like the example above work pretty well, but more involved songs with a beat and more defined harmony begin to lose platform accuracy, and too many platforms are created. This is my focus for the next week, along with other smaller polish items, such as destroying platforms that spawn on top of another platform to make the experience less janky.